Summary

- DeepSeek is open source and performs as well as top AI models, but was reportedly trained for far less money.

- Developed by Chinese engineers, it can compress complex models so they run locally on lower-end hardware.

- Concerns exist about its origins and potential biases.

The internet is abuzz with the name “DeepSeek”. Publicly traded AI companies like NVIDIA are seeing their stock prices tumble, and AI enthusiasts all over the world are rubbing their hands together with glee. Why is this new entrant to the AI world such a big deal?

DeepSeek Is an Open-Source AI With Big Claims

Like OpenAI’s o1, Claude, Llama, or any of the current AI darlings, DeepSeek is a generative AI model. More accurately, it’s a family of AI models, with variants designed for different applications.

Unlike, for example, OpenAI’s GPT models, DeepSeek is open source under the MIT license, which allows for commercial use. This means that the entire internal workings of the model are open to see. Anyone can use it without paying licensing fees of any kind, and there’s nothing stopping someone from modifying or building on the work that’s already been done. This is one of the key reasons DeepSeek has led to a short-term market disruption, which might turn into a long-term market correction.

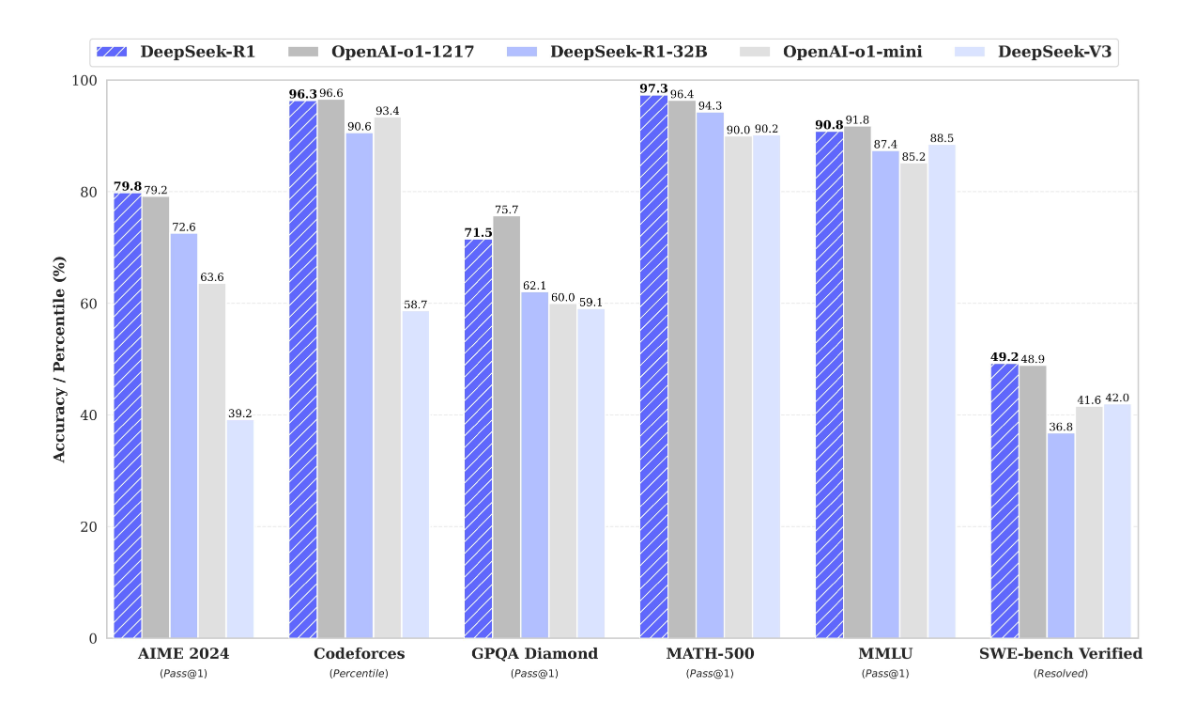

However, none of this really matters if DeepSeek isn’t any good. The other major factor that has everyone talking about this AI model is how well it performs. In AI benchmarks, DeepSeek performs as well as OpenAI’s o1 and other premier generative models, and even better in some cases. This is, of course, something anyone can verify, but the truly shocking claim is how little it cost to develop this model.

DeepSeek claims the model was trained for less than six million dollars. That sounds like a lot of money until you consider that the models it’s trading blows with reportedly cost in excess of a hundred million dollars to train. Not only that, but DeepSeek was trained on less powerful hardware than what’s available to US companies like OpenAI. Of course, this is one of the claims that isn’t so easily verified, and it’s possible that the true cost was much higher than the quoted amount. However, for now there’s no smoking gun to indicate that the costs were any higher than claimed.

The last “big deal” I think is worth mentioning about DeepSeek is how it’s been used to “distill” the reasoning abilities of a large, computationally expensive model into much smaller models built on open models like Llama. Basically, a smaller model is trained to mimic the outputs of a larger, more complex one, without needing all of the larger model’s machinery under the hood. It’s effectively compressing larger models into smaller ones with (so far) few apparent downsides. This is a big leap for running capable models locally, using less power and less hardware.
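To make the idea of distillation a little more concrete, here is a minimal sketch in Python. It assumes the classic textbook approach, where a small “student” model is penalized when its predicted probabilities drift from those of a large “teacher” model. This is not necessarily DeepSeek’s exact recipe, and the function names, the temperature value, and the three-word vocabulary scores are all made up for illustration.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the largest value for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the teacher's and student's softened predictions."""
    p = softmax(teacher_logits, temperature)  # what the big model believes
    q = softmax(student_logits, temperature)  # what the small model believes
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical scores over a three-word vocabulary (illustration only).
teacher = [4.0, 1.0, 0.5]
good_student = [3.8, 1.1, 0.4]  # closely mimics the teacher
poor_student = [0.5, 1.0, 4.0]  # disagrees with the teacher

print(distillation_loss(teacher, good_student))  # small loss
print(distillation_loss(teacher, poor_student))  # much larger loss
```

During training, the student’s weights would be nudged to shrink this loss across many examples, so it gradually learns to answer the way the teacher does.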

DeepSeek Was Developed by Chinese Engineers

DeepSeek is a startup led by Liang Wenfeng (39), who first saw success as a hedge fund manager. Specifically, a quantitative hedge fund manager. This is an approach to investment that uses machine learning to predict market trends so that investors can profit from them. This is why Liang already had access to the powerful hardware needed to train such a model.

DeepSeek seems to be something of a passion project, and its makers don’t appear to be looking to turn it into a for-profit endeavor. Indeed, the model has already been given away to everyone as open source. Ironically, the US embargoes on powerful AI chips from companies like NVIDIA might have been part of the reason DeepSeek’s developers were forced to make it so efficient.

You Can Try DeepSeek Right Now

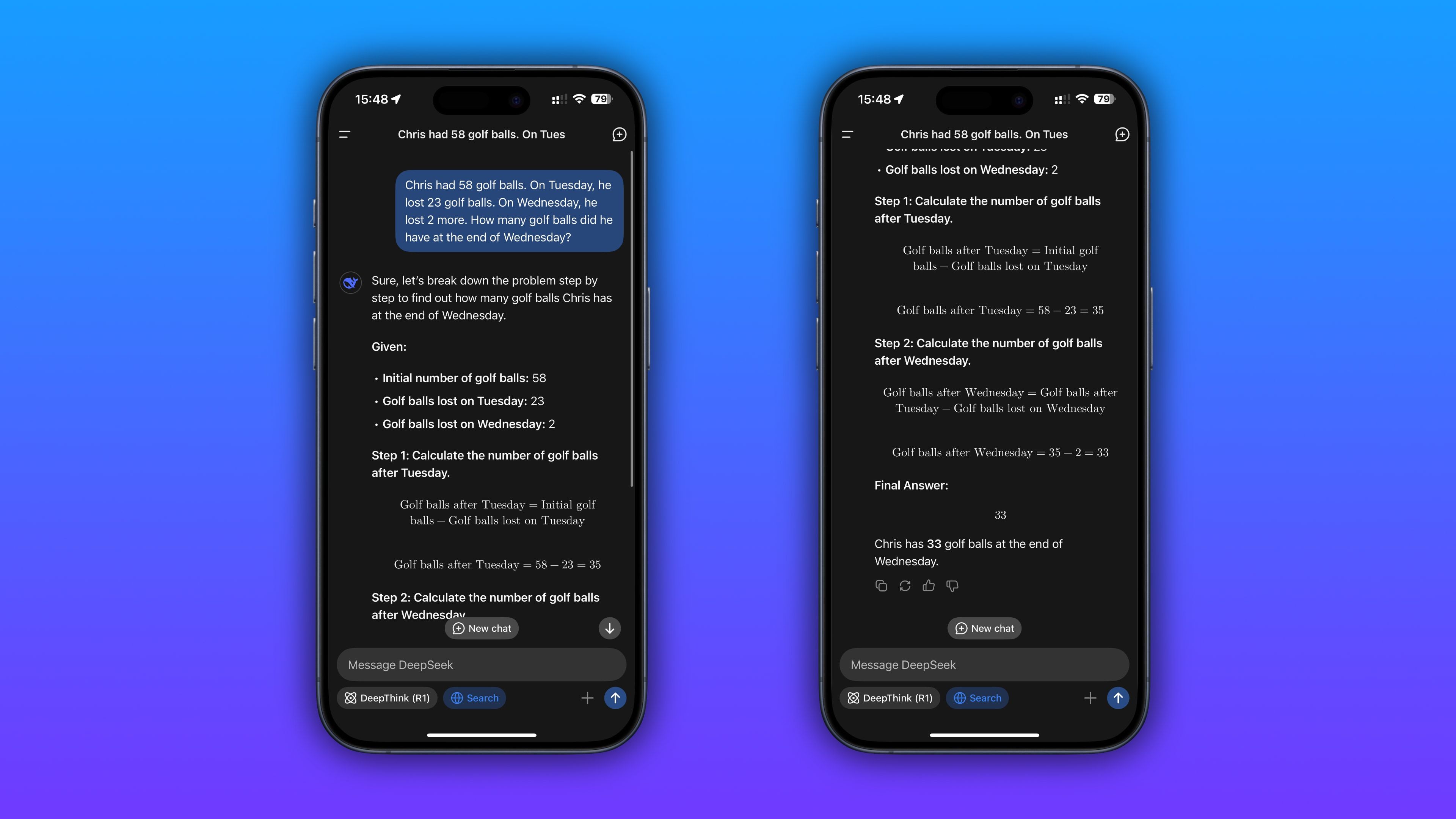

If you visit the DeepSeek website, you can get access to the app as well as links to the actual models, which you can download and use on your own hardware. The easiest way to run some version of DeepSeek on your own hardware is by using Ollama.

Of course, you’re not going to run the o1-level DeepSeek model on your laptop anytime soon, but with a few thousand dollars’ worth of high-end GPUs and RAM, it’s totally possible. There are, of course, many smaller DeepSeek models that aren’t quite as good, but will run well on the computer you probably have now. Heck, there’s even a version that will run (just) on a Raspberry Pi.
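If you’d rather script a local model than chat with it, something like the following Python sketch should work. It assumes Ollama is already installed and running on its default local address, and that you have pulled one of the smaller models first. The model tag `deepseek-r1:7b` and the helper names `build_payload` and `ask` are assumptions for illustration, so swap in whichever tag the Ollama library lists for the size you want.

```python
import json
import urllib.request

# Ollama's default local endpoint for one-off text generation.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_payload(prompt, model="deepseek-r1:7b"):
    """Build the JSON body; the default model tag here is an assumption."""
    # "stream": False asks for one complete reply instead of a token stream.
    return {"model": model, "prompt": prompt, "stream": False}

def ask(prompt, model="deepseek-r1:7b"):
    """Send a prompt to the locally running Ollama server and return its text."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]
```

With the server running, calling `ask("Explain model distillation in one sentence.")` returns the model’s answer as a string. Nothing leaves your machine, which is part of the appeal of running a model locally.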

There Are Some Special Concerns With DeepSeek

Parts of the DeepSeek story may seem too good to be true to some pundits in the AI industry, and the model originates in China, which raises concerns about bias, censorship, and even cybersecurity. It’s no surprise, then, that there’s some hesitance about DeepSeek.

Indeed, ask the online hosted version of the LLM about topics that are sensitive for the current Chinese government, and it might not be as forthcoming as you might expect. However, since the model is open to all, anyone with the right knowledge can adjust how it behaves, so these concerns can be addressed in principle.

It’s early days for DeepSeek, and it will take some time to see how things will shake out, but one thing I have no doubt about is that the generative AI industry has just undergone its first major paradigm shift since ChatGPT first launched to the public.